Project highlights

- Explore standard protocols for digitising analytical imaging of specimens.

- Develop artificial intelligence and statistical analysis methods to automatically measure components of plant phenotype (e.g. morphology, flowering).

- Assess the performance of AI models through a comparative analysis with manual evaluations conducted on optical images and destructive methods.

Overview

Collecting and analysing samples to measure environmental changes and understand ecosystem responses is key to environmental science (Paterson et al. 2020; Smol et al. 2001). A significant portion of the analysis entails non-destructive imaging of samples, including optical, vibrational micro-spectroscopy, and X-ray fluorescence (XRF) imaging, which generates substantial datasets in a relatively short timeframe. Managing and analysing such ‘big data’ can be time-consuming and demands expertise in fields such as image analysis and advanced statistical methods to fully extract and interpret the information it contains. There is therefore a pressing need to automate data analysis, both to accelerate workflows and to extract more of the valuable information in the acquired data.

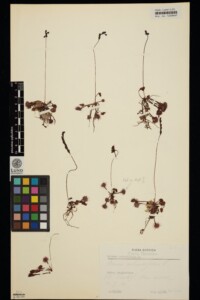

This project aims to develop artificial intelligence methods for automatic herbarium specimen analysis. We will use multiple approaches – optical, FTIR, pXR – to obtain digital photos and chemical and morphological data on herbarium specimens collected from different locations and at different times. After the construction and labelling of the digitised herbarium images, we will fine-tune state-of-the-art object detection and segmentation methods such as Cascade R-CNN (Cai and Vasconcelos 2019), Deformable DETR (Zhu et al. 2020) and Mask R-CNN (He et al. 2017) to detect the location of the petiole, leaves and ruler information in the image. Advanced image processing methods will then be developed to analyse the information on plant morphology (e.g. petiole length, leaf area, flower length, plant size, and the number of leaves).
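As a rough illustration of how a detected ruler could turn pixel measurements into physical units, the sketch below derives a millimetre-per-pixel scale from a ruler of known length and applies it to a petiole traced as a polyline and a leaf outlined as a polygon. The function names, the coordinate format and the example values are assumptions made for illustration; they are not part of the project specification, and the project may adopt a different calibration procedure.

```python
import math

# Hypothetical helpers: the names and input formats are illustrative assumptions.

def mm_per_pixel(ruler_length_px, ruler_length_mm):
    """Scale factor derived from a ruler segment of known physical length."""
    if ruler_length_px <= 0:
        raise ValueError("ruler length in pixels must be positive")
    return ruler_length_mm / ruler_length_px


def polyline_length_mm(points, scale):
    """Length of a traced structure (e.g. a petiole) given as (x, y) pixel points."""
    length_px = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    return length_px * scale


def polygon_area_mm2(vertices, scale):
    """Area of an outlined structure (e.g. a leaf) using the shoelace formula."""
    n = len(vertices)
    twice_area_px = sum(
        vertices[i][0] * vertices[(i + 1) % n][1]
        - vertices[(i + 1) % n][0] * vertices[i][1]
        for i in range(n)
    )
    # Areas scale with the square of the linear scale factor.
    return abs(twice_area_px) / 2 * scale ** 2


# Example: a 100 mm ruler segment that spans 500 pixels in the image.
scale = mm_per_pixel(ruler_length_px=500, ruler_length_mm=100)
petiole_mm = polyline_length_mm([(0, 0), (30, 40), (30, 140)], scale)
leaf_mm2 = polygon_area_mm2([(0, 0), (100, 0), (100, 50), (0, 50)], scale)
```

In this sketch the outlines would come from the detection and segmentation stage; the calibration step itself is independent of which detector produces them.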

A primary difficulty within this project is the high degree of similarity among various morphologies and the limited availability of data samples. The use of prior knowledge within pre-trained large language models is a promising solution to mitigate this challenge. To achieve this, this project will firstly make use of Convolutional Neural Networks (CNNs) to extract feature representations and further develop methods to convert them into visual tokens. These tokens will serve as inputs for pre-trained large language models (LLMs). We will explore solutions to use the outputs of LLMs as prior knowledge to improve the detection performance of existing frameworks.
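The conversion of CNN features into visual tokens is not specified further in this description. One common pattern, shown here purely as an assumed sketch, is to treat each spatial position of a feature map as one token and then apply a linear projection so that the token width matches the embedding size expected by the language model. Plain Python lists are used to keep the example free of dependencies; the function names and the tiny weight matrix are hypothetical, and a real system would use a tensor library and learned weights.

```python
# Illustrative sketch only: names, shapes and weights are assumptions.

def feature_map_to_tokens(feature_map):
    """Flatten a C x H x W feature map into H*W tokens, each of length C."""
    channels = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    return [
        [feature_map[c][y][x] for c in range(channels)]
        for y in range(height)
        for x in range(width)
    ]


def project_tokens(tokens, weight):
    """Apply a D x C projection matrix to each C-dimensional token."""
    return [
        [sum(w * v for w, v in zip(row, token)) for row in weight]
        for token in tokens
    ]


# A toy feature map with 2 channels on a 2 x 2 spatial grid.
feature_map = [
    [[1, 2], [3, 4]],  # channel 0
    [[5, 6], [7, 8]],  # channel 1
]
tokens = feature_map_to_tokens(feature_map)

# A toy 3 x 2 projection standing in for a learned layer.
weight = [[1, 0], [0, 1], [1, 1]]
visual_tokens = project_tokens(tokens, weight)
```

Each row of `visual_tokens` corresponds to one image location, so the sequence can be passed to a model that expects a list of fixed-width embeddings.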

Figure 1: The image shows a herbarium sheet with pressed specimens to be analysed for the project.

Host

Loughborough University

Theme

- Climate and Environmental Sustainability

- Organisms and Ecosystems

Supervisors

Project investigator

- Dr Haibin Cai, Loughborough University ([email protected])

Co-investigators

- Dr Jonathan Millett, Loughborough University ([email protected])

- Professor Paul Roach, Loughborough University ([email protected])

- Dr Nicky Nicolson, Royal Botanic Gardens Kew ([email protected])

- Dr Elspeth Haston, Royal Botanic Garden Edinburgh ([email protected])

How to apply

- Each host has a slightly different application process. Find out how to apply for this studentship.

- All applications must include the CENTA application form. Choose your application route.

Methodology

Hundreds of millions of specimens are housed in libraries (herbaria) worldwide. Many are digitised and easily accessible online, but the information contained within these images remains less accessible, as the analysis of specimens typically requires physical measurements, interpretation of in-hand or digitised specimens, or invasive methods.

This project will explore standard protocols for digitising analytical imaging of specimens, focussing on carnivorous plants. It will develop artificial intelligence and statistical analysis methods to automatically measure components of plant phenotype (e.g. morphology, flowering). Because existing deep-learning-based object detection models often fail to generalise well to new data, this project will further investigate the possibility of using pre-trained LLMs (such as BERT (Jia et al. 2022) and Alpaca-7B) as prior knowledge to improve the detection performance. The effectiveness of the AI models will be compared with manual evaluations conducted on optical images and destructive methods.
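The comparison between automated and manual evaluations could be summarised in several ways, and this description does not fix the metrics. The sketch below assumes paired measurements of the same specimens and reports three simple agreement statistics: mean absolute error, mean bias (automatic minus manual) and the Pearson correlation. The function name and the example values are hypothetical.

```python
import math

# Illustrative sketch: the choice of metrics and all names are assumptions.

def agreement_summary(manual, automatic):
    """Compare paired manual and automatic measurements of the same specimens."""
    if len(manual) != len(automatic) or len(manual) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    n = len(manual)
    diffs = [a - m for m, a in zip(manual, automatic)]
    mae = sum(abs(d) for d in diffs) / n   # typical size of the disagreement
    bias = sum(diffs) / n                  # systematic over- or under-estimation

    mean_m = sum(manual) / n
    mean_a = sum(automatic) / n
    cov = sum((m - mean_m) * (a - mean_a) for m, a in zip(manual, automatic))
    var_m = sum((m - mean_m) ** 2 for m in manual)
    var_a = sum((a - mean_a) ** 2 for a in automatic)
    if var_m == 0 or var_a == 0:
        raise ValueError("correlation is undefined for constant measurements")
    pearson_r = cov / math.sqrt(var_m * var_a)
    return {"mae": mae, "bias": bias, "pearson_r": pearson_r}


# Example: petiole lengths in millimetres for four specimens (made-up values).
manual_mm = [10, 20, 30, 40]
automatic_mm = [11, 19, 32, 41]
summary = agreement_summary(manual_mm, automatic_mm)
```

Reporting bias alongside error helps to distinguish a model that is noisy from one that consistently over- or under-measures, which a correlation coefficient alone would not reveal.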

Training and skills

Doctoral researchers (DRs) will be awarded CENTA Training Credits (CTCs) for participation in CENTA-provided and ‘free choice’ external training. One CTC can be earned per 3 hours of training, and DRs must accrue 100 CTCs (equivalent to 300 hours of training) across the three and a half years of their PhD.

The PhD candidate will have the opportunity to work collaboratively with the Loughborough AI research team in Computer Science for three years. They will have full access to specialist resources, including laboratories and High-Performance Computing facilities at Loughborough University, as well as the expertise available within the Royal Botanic Gardens Kew team. The research will also provide opportunities to attend academic conferences, summer schools, and other training courses to improve technical skills. The candidate will master advanced deep learning techniques and have excellent career prospects on successful completion of the PhD.

Partners and collaboration

Co-supervision will be by Jonathan Millett (Loughborough University), Paul Roach (Loughborough University), Nicky Nicolson (Royal Botanic Gardens Kew) and Elspeth Haston (Royal Botanic Garden Edinburgh [RBGE]), taxonomic experts with a long history of working with herbarium collections. Kew and RBGE house major herbaria (RBG Kew 2024; RBGE 2022), between them holding the world’s largest number of herbarium collections, making them key to this project’s success. Access to the facilities at both sites will be open for the duration of the project, although the student should expect to spend most of their time in Loughborough.

Further details

For further information about this project, please contact Dr. Haibin Cai (h[email protected]), www.lboro.ac.uk/departments/compsci/staff/haibin-cai/.

To apply to this project:

- You must include a CENTA studentship application form, downloadable from: CENTA Studentship Application Form 2025.

- You must include a CV with the names of at least two referees (preferably three) who can comment on your academic abilities.

- Please submit your application and complete the host institution application process via: https://www.lboro.ac.uk/study/postgraduate/apply/research-applications/ The CENTA Studentship Application Form 2025 and CV, along with other supporting documents required by Loughborough University, can be uploaded at Section 10 “Supporting Documents” of the online portal. Under Section 4 “Programme Selection” the proposed study centre is Central England NERC Training Alliance. Please quote CENTA 2025-LU3 when completing the application form.

- For further enquiries about the application process, please contact the School of Social Sciences & Humanities ([email protected]).

Applications must be submitted by 23:59 GMT on Wednesday 8th January 2025.

Possible timeline

Year 1

During the first year, the candidate will conduct a comprehensive review of existing object detection methods and large language models. They will have regular meetings with supervisors to develop effective classification methods. Alongside these research activities, they will attend several courses organised by the Loughborough Doctoral College on topics such as academic writing and presentation. Their research progress will be assessed through a six-month report, a first-year report and a review meeting with assessors.

Year 2

The candidate is expected to submit a review paper to a peer-reviewed journal. They will also work on developing algorithms for data pre-processing, image augmentation and the cascade networks to achieve good recognition accuracy. The performance of the developed models will be evaluated on different herbarium datasets.

Year 3

The candidate will continue working on the research project. They will focus on further improving the recognition accuracy by combining the developed models with strategies such as meta-learning and fine-tuning.

Further reading

Cai, Z. and Vasconcelos, N., 2019. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(5), pp.1483-1498.

He, K., Gkioxari, G., Dollár, P. and Girshick, R., 2017. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (pp. 2961-2969).

Jia, M., Tang, L., Chen, B.C., Cardie, C., Belongie, S., Hariharan, B. and Lim, S.N., 2022, October. Visual prompt tuning. In European Conference on Computer Vision (pp. 709-727). Cham: Springer Nature Switzerland.

Paterson, A.M., Köster, D., Reavie, E.D. and Whitmore, T.J., 2020. Preface: paleolimnology and lake management. Lake and Reservoir Management, 36(3), pp.205-209.

Royal Botanic Garden Edinburgh (2022) A leading botanical collection of approximately 3 million specimens, representing half to two thirds of the world’s flora. Available at: https://www.rbge.org.uk/science-and-conservation/herbarium/ (Accessed: 18 October 2022).

Royal Botanic Gardens Kew (2024) The Herbarium.

Smol, J.P., Birks, H.J. and Last, W.M., 2001. Tracking environmental change using lake sediments: Volume 4: Zoological indicators. Springer Netherlands.

Zhu, X., Su, W., Lu, L., Li, B., Wang, X. and Dai, J., 2020. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159.